Hi

Thank you for your reply!

I've tried with -machine accel=tcg and got the output below:

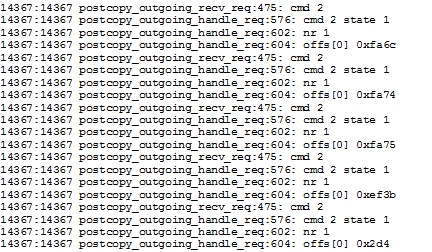

src node:

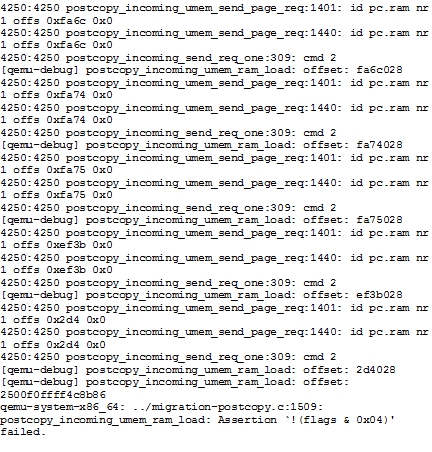

dest node:

and sometimes the last line got

I'm wondering why umemd.mig_read_fd can be read again without a page_req from the dest node.

From the last line on the src node, we can see that it doesn't get a page req, but the dest node reads umemd.mig_read_fd again.

I think umemd.mig_read_fd can be read only when the src node sends something to the socket. Is there any other situation?
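
One general case that may be worth ruling out: a stream socket is also reported as readable when the peer closes or shuts down its end, and the read then returns 0 bytes (EOF). The following is only a minimal sketch of that behaviour using a local socket pair from the Python standard library. It says nothing about what the postcopy code actually does, and `dest_side`, `src_side`, `readable` and `demo` are hypothetical names, not identifiers from the patches.

```python
import select
import socket


def readable(sock, timeout=0.1):
    """Return True if select() reports the socket as readable."""
    ready, _, _ = select.select([sock], [], [], timeout)
    return bool(ready)


def demo():
    # Hypothetical stand-ins for the two ends of the migration socket.
    dest_side, src_side = socket.socketpair()
    results = {}

    # Nothing has been sent yet, so the destination end is not readable.
    results["idle"] = readable(dest_side)

    # The source sends data, so the destination end becomes readable.
    src_side.sendall(b"page")
    results["after_send"] = readable(dest_side)
    results["data"] = dest_side.recv(4)

    # The source closes its end without sending anything more.
    # The destination end is readable again, but recv() returns b"" (EOF).
    src_side.close()
    results["after_close"] = readable(dest_side)
    results["eof"] = dest_side.recv(4)

    dest_side.close()
    return results


if __name__ == "__main__":
    print(demo())
```

So if the extra read on umemd.mig_read_fd returns 0 bytes, the src side closing or shutting down the connection would be one possibility to check.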

BTW, can you tell me how the KVM pv clock makes the patches work incorrectly?

Thanks

Tommy

From: Isaku Yamahata

Date: 2012-01-16 18:17

To: thfbjyddx

Subject: Re: [Qemu-devel] Re: [PATCH 00/21][RFC] postcopy live migration

On Mon, Jan 16, 2012 at 03:51:16PM +0900, Isaku Yamahata wrote:

> Thank you for your info.

> I suppose I found the cause, MSR_KVM_WALL_CLOCK and MSR_KVM_SYSTEM_TIME.

> Your kernel enables KVM paravirt_ops, right?

>

> Although I'm preparing the next patch series including the fixes,

> you can also try postcopy by disabling paravirt_ops or disabling kvm

> (use tcg, i.e. -machine accel=tcg).

Disabling KVM pv clock would be ok.

Passing no-kvmclock to guest kernel disables it.

> thanks,

>

>

> On Thu, Jan 12, 2012 at 09:26:03PM +0800, thfbjyddx wrote:

> >

> > Do you know what wchan the process was blocked at?

> > kvm_vcpu_ioctl(env, KVM_SET_MSRS, &msr_data) doesn't seem to block.

> >

> > It's

> > WCHAN COMMAND

> > umem_fault------qemu-system-x86

> >

> >

> > ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

> > Tommy

> >

> > From: Isaku Yamahata

> > Date: 2012-01-12 16:54

> > To: thfbjyddx

> > CC: t.hirofuchi; qemu-devel; kvm; satoshi.itoh

> > Subject: Re: [Qemu-devel] Re: [PATCH 00/21][RFC] postcopy live migration

> > On Thu, Jan 12, 2012 at 04:29:44PM +0800, thfbjyddx wrote:

> > > Hi, I've dug more these days

> > >

> > > > (qemu) migration-tcp: Attempting to start an incoming migration

> > > > migration-tcp: accepted migration

> > > > 4872:4872 postcopy_incoming_ram_load:1018: incoming ram load

> > > > 4872:4872 postcopy_incoming_ram_load:1031: addr 0x10870000 flags 0x4

> > > > 4872:4872 postcopy_incoming_ram_load:1057: done

> > > > 4872:4872 postcopy_incoming_ram_load:1018: incoming ram load

> > > > 4872:4872 postcopy_incoming_ram_load:1031: addr 0x0 flags 0x10

> > > > 4872:4872 postcopy_incoming_ram_load:1037: EOS

> > > > 4872:4872 postcopy_incoming_ram_load:1018: incoming ram load

> > > > 4872:4872 postcopy_incoming_ram_load:1031: addr 0x0 flags 0x10

> > > > 4872:4872 postcopy_incoming_ram_load:1037: EOS

> > >

> > > There should be only a single EOS line. Just a copy & paste mistake?

> > >

> > > There must be two EOS lines: one comes from postcopy_outgoing_ram_save_live

> > > (...stage == QEMU_SAVE_LIVE_STAGE_PART) and the other from

> > > postcopy_outgoing_ram_save_live(...stage == QEMU_SAVE_LIVE_STAGE_END).

> > > I think in postcopy the ram_save_live call in the iterate part can be ignored,

> > > so why are qemu_put_byte(f, QEMU_VM_SECTION_PART) and

> > > qemu_put_byte(f, QEMU_VM_SECTION_END) still in the procedure? Are they essential?

> >

> > Not so essential.

> >

> > > Can you please track it down one more step?

> > > On which line did it get stuck in kvm_put_msrs()? kvm_put_msrs() doesn't seem to

> > > block. (A backtrace from the debugger would be best.)

> > >

> > > It gets to kvm_vcpu_ioctl(env, KVM_SET_MSRS, &msr_data) and never returns,

> > > so it gets stuck there.

> >

> > Do you know what wchan the process was blocked at?

> > kvm_vcpu_ioctl(env, KVM_SET_MSRS, &msr_data) doesn't seem to block.

> >

> >

> > > When I checked the EOS problem,

> > > I just commented out qemu_put_byte(f, QEMU_VM_SECTION_PART); and

> > > qemu_put_be32(f, se->section_id)

> > > (I think this is the wrong way to fix it, and I don't know how it gets through)

> > > and left just se->save_live_state in qemu_savevm_state_iterate.

> > > It didn't get stuck at kvm_put_msrs(),

> > > but it hit another error:

> > > (qemu) migration-tcp: Attempting to start an incoming migration

> > > migration-tcp: accepted migration

> > > 2126:2126 postcopy_incoming_ram_load:1018: incoming ram load

> > > 2126:2126 postcopy_incoming_ram_load:1031: addr 0x10870000 flags 0x4

> > > 2126:2126 postcopy_incoming_ram_load:1057: done

> > > migration: successfully loaded vm state

> > > 2126:2126 postcopy_incoming_fork_umemd:1069: fork

> > > 2126:2126 postcopy_incoming_fork_umemd:1127: qemu pid: 2126 daemon pid: 2129

> > > 2130:2130 postcopy_incoming_umemd:1840: daemon pid: 2130

> > > 2130:2130 postcopy_incoming_umemd:1875: entering umemd main loop

> > > Can't find block !

> > > 2130:2130 postcopy_incoming_umem_ram_load:1526: shmem == NULL

> > > 2130:2130 postcopy_incoming_umemd:1882: exiting umemd main loop

> > > and at the same time, the destination node didn't show the EOS

> > >

> > > so I still can't solve the stuck problem

> > > Thanks for your help~!

> > > ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

> > ━

> > > Tommy

> > >

> > > From: Isaku Yamahata

> > > Date: 2012-01-11 10:45

> > > To: thfbjyddx

> > > CC: t.hirofuchi; qemu-devel; kvm; satoshi.itoh

> > > Subject: Re: [Qemu-devel] Re: [PATCH 00/21][RFC] postcopy live migration

> > > On Sat, Jan 07, 2012 at 06:29:14PM +0800, thfbjyddx wrote:

> > > > Hello all!

> > >

> > > Hi, thank you for the detailed report. The procedure you've tried basically

> > > looks good. Some comments below.

> > >

> > > > I got the QEMU base version (03ecd2c80a64d030a22fe67cc7a60f24e17ff211) and

> > > > patched it correctly,

> > > > but it still didn't work and I got the same scenario as before.

> > > > outgoing node intel x86_64; incoming node amd x86_64. guest image is on nfs

> > > >

> > > >

> > > > I think I should show what I did more clearly and hope somebody can figure out

> > > > the problem

> > > >

> > > > ・ 1, on both the incoming and outgoing nodes, patch QEMU and boot a 3.1.7 kernel with umem

> > > >

> > > > ./configure --target-list=x86_64-softmmu --enable-kvm --enable-postcopy --enable-debug

> > > > make

> > > > make install

> > > >

> > > > ・ 2, outgoing qemu:

> > > >

> > > > qemu-system-x86_64 -m 256 -hda xxx -monitor stdio -vnc :2 -usbdevice tablet -machine accel=kvm

> > > > incoming qemu:

> > > > qemu-system-x86_64 -m 256 -hda xxx -postcopy -incoming tcp:0:8888 -monitor stdio -vnc :2 -usbdevice tablet -machine accel=kvm

> > > >

> > > > ・ 3, outgoing node:

> > > >

> > > > migrate -d -p -n tcp:(incoming node ip):8888

> > > >

> > > > result:

> > > >

> > > > ・ outgoing qemu:

> > > >

> > > > info status: VM-status: paused (finish-migrate);

> > > >

> > > > ・ incoming qemu:

> > > >

> > > > can't type any more and can't kill the process(qemu-system-x86)

> > > >

> > > > I enabled the debug flag in migration.c, migration-tcp.c and migration-postcopy.c:

> > > >

> > > > ・ outgoing qemu:

> > > >

> > > > (qemu) migration-tcp: connect completed

> > > > migration: beginning savevm

> > > > 4500:4500 postcopy_outgoing_ram_save_live:540: stage 1

> > > > migration: iterate

> > > > 4500:4500 postcopy_outgoing_ram_save_live:540: stage 2

> > > > migration: done iterating

> > > > 4500:4500 postcopy_outgoing_ram_save_live:540: stage 3

> > > > 4500:4500 postcopy_outgoing_begin:716: outgoing begin

> > > >

> > > > ・ incoming qemu:

> > > >

> > > > (qemu) migration-tcp: Attempting to start an incoming migration

> > > > migration-tcp: accepted migration

> > > > 4872:4872 postcopy_incoming_ram_load:1018: incoming ram load

> > > > 4872:4872 postcopy_incoming_ram_load:1031: addr 0x10870000 flags 0x4

> > > > 4872:4872 postcopy_incoming_ram_load:1057: done

> > > > 4872:4872 postcopy_incoming_ram_load:1018: incoming ram load

> > > > 4872:4872 postcopy_incoming_ram_load:1031: addr 0x0 flags 0x10

> > > > 4872:4872 postcopy_incoming_ram_load:1037: EOS

> > > > 4872:4872 postcopy_incoming_ram_load:1018: incoming ram load

> > > > 4872:4872 postcopy_incoming_ram_load:1031: addr 0x0 flags 0x10

> > > > 4872:4872 postcopy_incoming_ram_load:1037: EOS

> > >

> > > There should be only a single EOS line. Just a copy & paste mistake?

> > >

> > >

> > > > From the result:

> > > > It didn't get to "successfully loaded vm state",

> > > > so it is still in qemu_loadvm_state. I found it is in

> > > > cpu_synchronize_all_post_init->kvm_arch_put_registers->kvm_put_msrs, where it got

> > > > stuck.

> > >

> > > Can you please track it down one more step?

> > > On which line did it get stuck in kvm_put_msrs()? kvm_put_msrs() doesn't seem to

> > > block. (A backtrace from the debugger would be best.)

> > >

> > > If possible, can you please test with a simpler configuration,

> > > i.e. drop as many devices as possible (no usbdevice, no disk...),

> > > so that debugging will be simpler.

> > >

> > > thanks,

> > >

> > > > Can anyone give some advice on the problem?

> > > > Thanks very much~

> > > >

> > > > ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

> > ━

> > > ━

> > > > Tommy

> > > >

> > > > From: Isaku Yamahata

> > > > Date: 2011-12-29 09:25

> > > > To: kvm; qemu-devel

> > > > CC: yamahata; t.hirofuchi; satoshi.itoh

> > > > Subject: [Qemu-devel] [PATCH 00/21][RFC] postcopy live migration

> > > > Intro

> > > > =====

> > > > This patch series implements postcopy live migration.[1]

> > > > As discussed at KVM Forum 2011, a dedicated character device is used for

> > > > distributed shared memory between the migration source and destination.

> > > > Now we can discuss/benchmark/compare with precopy. I believe there is

> > > > much room for improvement.

> > > >

> > > > [1] http://wiki.qemu.org/Features/PostCopyLiveMigration

> > > >

> > > >

> > > > Usage

> > > > =====

> > > > You need to load the umem character device on the host before starting migration.

> > > > Postcopy can be used with both the tcg and kvm accelerators. The implementation depends

> > > > only on the Linux umem character device, but the driver-dependent code is split

> > > > into a separate file.

> > > > I tested only the host page size == guest page size case, but the implementation

> > > > allows the host page size != guest page size case.

> > > >

> > > > The following options are added with this patch series.

> > > > - incoming part

> > > > command line options

> > > > -postcopy [-postcopy-flags <flags>]

> > > > where flags is for changing behavior for benchmark/debugging

> > > > Currently the following flags are available

> > > > 0: default

> > > > 1: enable touching page request

> > > >

> > > > example:

> > > > qemu -postcopy -incoming tcp:0:4444 -monitor stdio -machine accel=kvm

> > > >

> > > > - outgoing part

> > > > options for migrate command

> > > > migrate [-p [-n]] URI

> > > > -p: indicate postcopy migration

> > > > -n: disable background transferring pages: This is for benchmark/debugging

> > > >

> > > > example:

> > > > migrate -p -n tcp:<dest ip address>:4444

> > > >

> > > >

> > > > TODO

> > > > ====

> > > > - benchmark/evaluation. Especially how async page fault affects the result.

> > > > - improve/optimization

> > > > At the moment at least what I'm aware of is

> > > > - touching pages in incoming qemu process by fd handler seems suboptimal.

> > > > creating dedicated thread?

> > > > - making incoming socket non-blocking

> > > > - outgoing handler seems suboptimal causing latency.

> > > > - catch up memory API change

> > > > - consider on FUSE/CUSE possibility

> > > > - and more...

> > > >

> > > > basic postcopy work flow

> > > > ========================

> > > > qemu on the destination
> > > >       |
> > > >       V
> > > > open(/dev/umem)
> > > >       |
> > > >       V
> > > > UMEM_DEV_CREATE_UMEM
> > > >       |
> > > >       V
> > > > Here we have two file descriptors to
> > > > umem device and shmem file
> > > >       |
> > > >       |                                   umemd
> > > >       |                                   daemon on the destination
> > > >       |
> > > >       V    create pipe to communicate
> > > > fork()---------------------------------------,
> > > >       |                                      |
> > > >       V                                      |
> > > > close(socket)                                V
> > > > close(shmem)                              mmap(shmem file)
> > > >       |                                      |
> > > >       V                                      V
> > > > mmap(umem device) for guest RAM           close(shmem file)
> > > >       |                                      |
> > > > close(umem device)                           |
> > > >       |                                      |
> > > >       V                                      |
> > > > wait for ready from daemon <----pipe-----send ready message
> > > >       |                                      |
> > > >       |                                   Here the daemon takes over
> > > > send ok------------pipe---------------> the owner of the socket
> > > >       |                                   to the source
> > > >       V                                      |
> > > > entering post copy stage                     |
> > > > start guest execution                        |
> > > >       |                                      |
> > > >       V                                      V
> > > > access guest RAM                          UMEM_GET_PAGE_REQUEST
> > > >       |                                      |
> > > >       V                                      V
> > > > page fault ------------------------------>page offset is returned
> > > > block                                        |
> > > >                                              V
> > > >                                           pull page from the source
> > > >                                           write the page contents
> > > >                                           to the shmem.
> > > >                                              |
> > > >                                              V
> > > > unblock     <-----------------------------UMEM_MARK_PAGE_CACHED
> > > > the fault handler returns the page
> > > > page fault is resolved
> > > >       |
> > > >       |                                   pages can be sent
> > > >       |                                   backgroundly
> > > >       |                                      |
> > > >       |                                      V
> > > >       |                                   UMEM_MARK_PAGE_CACHED
> > > >       |                                      |
> > > >       V                                      V
> > > > The specified pages<-----pipe------------request to touch pages
> > > > are made present by                          |
> > > > touching guest RAM.                          |
> > > >       |                                      |
> > > >       V                                      V
> > > >      reply-------------pipe-------------> release the cached page
> > > >       |                                   madvise(MADV_REMOVE)
> > > >       |                                      |
> > > >       V                                      V
> > > >
> > > >          all the pages are pulled from the source
> > > >
> > > >       |                                      |
> > > >       V                                      V
> > > > the vma becomes anonymous<----------------UMEM_MAKE_VMA_ANONYMOUS
> > > > (note: I'm not sure if this can be implemented or not)
> > > >       |                                      |
> > > >       V                                      V
> > > > migration completes                       exit()

> > > >

> > > >

> > > >
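
As a rough illustration of the fork and ready/ok exchange near the top of the work flow above, here is a minimal Python sketch that uses only os.fork() and os.pipe(). It does not touch /dev/umem, the shmem file or the migration socket, and it is not how the patches implement the step. The function name `fork_handshake` and the pipe variable names are hypothetical.

```python
import os


def fork_handshake():
    """Parent waits for b"ready" from the child, then replies b"ok"."""
    # Two one-way pipes, one per direction (hypothetical names).
    from_daemon_r, from_daemon_w = os.pipe()
    to_daemon_r, to_daemon_w = os.pipe()

    pid = os.fork()
    if pid == 0:
        # Child: stands in for the umemd daemon.
        status = 1
        try:
            os.close(from_daemon_r)
            os.close(to_daemon_w)
            os.write(from_daemon_w, b"ready")   # "send ready message"
            reply = os.read(to_daemon_r, 2)     # wait for "send ok"
            status = 0 if reply == b"ok" else 1
        finally:
            # Always leave the child here, whatever happened above.
            os._exit(status)

    # Parent: stands in for the incoming qemu process.
    os.close(from_daemon_w)
    os.close(to_daemon_r)
    message = os.read(from_daemon_r, 5)         # "wait for ready from daemon"
    os.write(to_daemon_w, b"ok")                # "send ok"
    _, wait_status = os.waitpid(pid, 0)
    os.close(from_daemon_r)
    os.close(to_daemon_w)
    return message, os.WEXITSTATUS(wait_status)


if __name__ == "__main__":
    print(fork_handshake())
```

Using one pipe per direction keeps each end either read-only or write-only, so neither process can read back its own message by accident.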

> > > > Isaku Yamahata (21):

> > > > arch_init: export sort_ram_list() and ram_save_block()

> > > > arch_init: export RAM_SAVE_xxx flags for postcopy

> > > > arch_init/ram_save: introduce constant for ram save version = 4

> > > > arch_init: refactor host_from_stream_offset()

> > > > arch_init/ram_save_live: factor out RAM_SAVE_FLAG_MEM_SIZE case

> > > > arch_init: refactor ram_save_block()

> > > > arch_init/ram_save_live: factor out ram_save_limit

> > > > arch_init/ram_load: refactor ram_load

> > > > exec.c: factor out qemu_get_ram_ptr()

> > > > exec.c: export last_ram_offset()

> > > > savevm: export qemu_peek_buffer, qemu_peek_byte, qemu_file_skip

> > > > savevm: qemu_pending_size() to return pending buffered size

> > > > savevm, buffered_file: introduce method to drain buffer of buffered file

> > > > migration: export migrate_fd_completed() and migrate_fd_cleanup()

> > > > migration: factor out parameters into MigrationParams

> > > > umem.h: import Linux umem.h

> > > > update-linux-headers.sh: teach umem.h to update-linux-headers.sh

> > > > configure: add CONFIG_POSTCOPY option

> > > > postcopy: introduce -postcopy and -postcopy-flags option

> > > > postcopy outgoing: add -p and -n option to migrate command

> > > > postcopy: implement postcopy livemigration

> > > >

> > > > Makefile.target | 4 +

> > > > arch_init.c | 260 ++++---

> > > > arch_init.h | 20 +

> > > > block-migration.c | 8 +-

> > > > buffered_file.c | 20 +-

> > > > buffered_file.h | 1 +

> > > > configure | 12 +

> > > > cpu-all.h | 9 +

> > > > exec-obsolete.h | 1 +

> > > > exec.c | 75 +-

> > > > hmp-commands.hx | 12 +-

> > > > hw/hw.h | 7 +-

> > > > linux-headers/linux/umem.h | 83 ++

> > > > migration-exec.c | 8 +

> > > > migration-fd.c | 30 +

> > > > migration-postcopy-stub.c | 77 ++

> > > > migration-postcopy.c | 1891 +++++++++++++++++++++++++++++++++++++++

> > > > migration-tcp.c | 37 +-

> > > > migration-unix.c | 32 +-

> > > > migration.c | 53 +-

> > > > migration.h | 49 +-

> > > > qemu-common.h | 2 +

> > > > qemu-options.hx | 25 +

> > > > qmp-commands.hx | 10 +-

> > > > savevm.c | 31 +-

> > > > scripts/update-linux-headers.sh | 2 +-

> > > > sysemu.h | 4 +-

> > > > umem.c | 379 ++++++++

> > > > umem.h | 105 +++

> > > > vl.c | 20 +-

> > > > 30 files changed, 3086 insertions(+), 181 deletions(-)

> > > > create mode 100644 linux-headers/linux/umem.h

> > > > create mode 100644 migration-postcopy-stub.c

> > > > create mode 100644 migration-postcopy.c

> > > > create mode 100644 umem.c

> > > > create mode 100644 umem.h

> > > >

> > > >

> > > >

> > >

> > > --

> > > yamahata

> > >

> > >

> >

> > --

> > yamahata

> >

> >

>

> --

> yamahata

>

--

yamahata