Hello Devs from the QEMU Community,

I’m Trilok Bhattacharya, a postgraduate student at the University of Manchester. My dissertation project investigates the effects of snapshot streaming on I/O throughput, and ways to mitigate them.

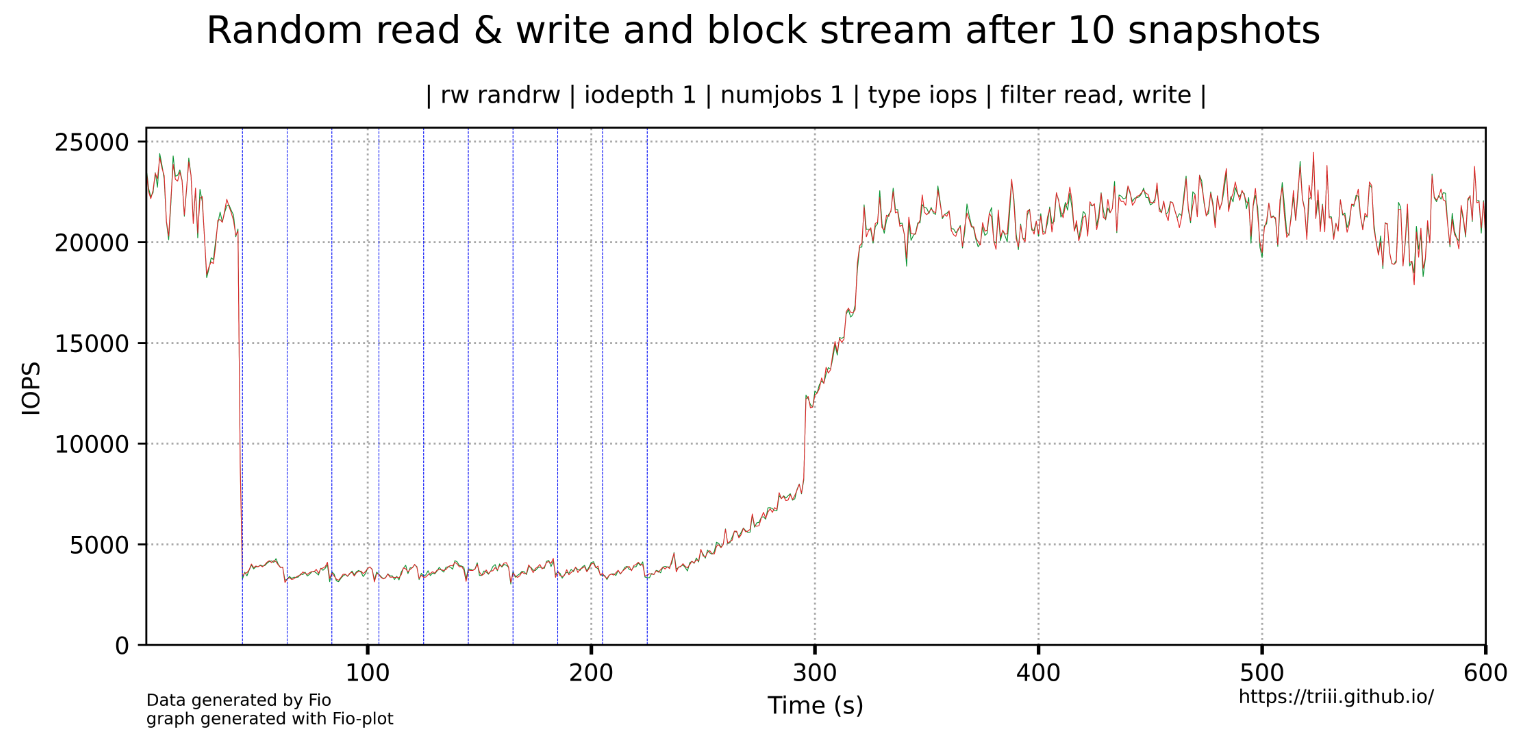

As part of this work, I benchmarked a standalone 50G qcow2 disk in an Ubuntu 18.04 VM with fio, while taking snapshots at fixed intervals.

The test VM is launched with the following bash script:

```bash
VM_LOCATION=${1:-$HOME/projects/vms/disks/ub-18.04_50G.qcow2}
QEMU=/usr/local/bin/qemu-system-x86_64
NETWORK_ARGS="-nic user,hostfwd=tcp:127.0.0.1:1122-:22"
SERIAL="-daemonize"

sudo $QEMU -smp 4 -m 4G \
    -drive id=drive0,if=none,format=qcow2,file=$VM_LOCATION,cache=none,aio=threads,discard=unmap,overlap-check=constant,l2-cache-size=8M \
    -device virtio-blk-pci,drive=drive0,id=virtio0 \
    --accel kvm \
    -display none \
    $NETWORK_ARGS \
    -chardev socket,path=qemu-ga-socket,server=on,wait=off,id=qga0 \
    -device virtio-serial \
    -device virtserialport,chardev=qga0,name=org.qemu.guest_agent.0 \
    -qmp unix:qemu-qmp-socket,server,nowait \
    -monitor unix:qemu-monitor-socket,server,nowait \
    -D qemu.log \
    $SERIAL
```

The fio job file is:

```ini
[global]
size=10g
direct=1
iodepth=64
ioengine=libaio
bs=16k
directory=/mnt/data
log_avg_msec=1000
numjobs=1
runtime=600
time_based=1

[writer1]
rw=randrw
write_iops_log=/tmp/results/randrw-iodepth-1-numjobs-1_iops
```

Ten snapshots are taken at 20-second intervals, after an initial wait of 15 seconds.

Each snapshot is taken through the HMP monitor socket with:

```bash
echo "snapshot_blkdev $block_device $snapshot_dir/$name" | sudo socat - UNIX-CONNECT:$socket_location/qemu-monitor-socket
```
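For reproducibility, the schedule described above (a 15-second initial wait, then ten snapshots 20 seconds apart) could be scripted roughly as follows. This is a minimal sketch, not the exact script used for the measurements. It assumes `block_device` defaults to `drive0` (the `-drive id` from the launch script); the snapshot file names `snap-N.qcow2`, the default directories, and the `DRY_RUN`, `INITIAL_WAIT`, `INTERVAL` and `COUNT` overrides are hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch of the snapshot schedule: wait INITIAL_WAIT seconds, then
# send COUNT "snapshot_blkdev" commands to the HMP monitor socket, one every
# INTERVAL seconds. Defaults mirror the schedule described in the text.
# ASSUMPTIONS (hypothetical, not from the original setup): the default
# block_device "drive0", the snapshot file names snap-N.qcow2, the default
# directories, and the DRY_RUN/INITIAL_WAIT/INTERVAL/COUNT overrides.
take_snapshots() {
    local block_device=${block_device:-drive0}
    local snapshot_dir=${snapshot_dir:-/tmp/snapshots}
    local socket_location=${socket_location:-$PWD}
    local initial_wait=${INITIAL_WAIT:-15}
    local interval=${INTERVAL:-20}
    local count=${COUNT:-10}
    local i name cmd

    sleep "$initial_wait"
    for ((i = 1; i <= count; i++)); do
        name="snap-$i.qcow2"
        cmd="snapshot_blkdev $block_device $snapshot_dir/$name"
        if [[ ${DRY_RUN:-0} == 1 ]]; then
            # Print the HMP command instead of sending it.
            echo "$cmd"
        else
            # socat reads the command from stdin ("-") and forwards it to
            # the socket created by "-monitor unix:qemu-monitor-socket,...".
            echo "$cmd" | sudo socat - "UNIX-CONNECT:$socket_location/qemu-monitor-socket"
        fi
        # Wait between snapshots, but not after the last one.
        if ((i < count)); then
            sleep "$interval"
        fi
    done
}
```

For example, `snapshot_dir=$HOME/snaps take_snapshots &` started alongside the fio run would follow the same schedule. Because `sleep` does not account for the time each `socat` call takes, the actual snapshot times drift slightly later than 15 + 20·k seconds.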

Plotting the fio IOPS log produces the attached graph (image 1), in which the blue lines mark the moments when snapshots are taken.

There is a sharp dip in I/O throughput as soon as snapshots are taken, and it lasts for more than 100 seconds before I/O returns to “normal”.
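To put numbers on the dip rather than reading them off the graph, the mean IOPS over a time window can be computed directly from the fio log. This is a minimal sketch assuming the standard fio log line layout (time in milliseconds, value, data direction with 0 = read, 1 = write, 2 = trim, block size, offset). Because the job uses `rw=randrw`, the log interleaves read and write entries, so the sketch averages each direction separately. fio appends the log type and job index to the `write_iops_log` prefix, so the file to pass ends in `_iops.1.log`. The function name `mean_iops` is hypothetical.

```bash
#!/usr/bin/env bash
# mean_iops LOG START_S END_S
# Prints the mean IOPS per data direction for log entries whose timestamp
# lies in [START_S, END_S) seconds since the start of the fio job.
# Assumes fio log lines of the form "time_ms, value, direction, bs, offset..."
# where direction is 0 = read, 1 = write, 2 = trim.
mean_iops() {
    local log=$1 start=$2 end=$3
    awk -F', *' -v s="$start" -v e="$end" '
        {
            t = $1 / 1000.0              # log timestamps are in milliseconds
            if (t >= s && t < e) {
                sum[$3] += $2            # accumulate IOPS per direction
                n[$3]++
            }
        }
        END {
            split("read write trim", label, " ")
            for (d = 0; d <= 2; d++)
                if (n[d] > 0)
                    printf "%s %.1f\n", label[d + 1], sum[d] / n[d]
        }' "$log"
}
```

For example, `mean_iops "$log" 0 15` gives the baseline before the first snapshot. Comparing it with windows such as `mean_iops "$log" 15 215` (the snapshot phase) and `mean_iops "$log" 215 600` shows how deep the dip is and how long throughput takes to recover.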

I have tried to find the root cause of this drop in performance, but have not found anything significant. I would appreciate any explanation of this behaviour, or pointers on where to investigate further.

Any help from the community would be very valuable in advancing my project.

Looking forward to hearing back.

Sincerely,

Trilok